Once again this year, a small Compass Security delegation attended Insomni’hack in Geneva. Among this year's novelties, the workshops spanned two days (Tuesday and Wednesday), as did the conference (Thursday and Friday). There was also a new kind of CTF, a blue-team CTF called Boss of the SOC. Although we did not take part in it, we salute this initiative to bring offense and defense together under the same roof. This blog post gives a quick summary of some of the talks I attended.

Keynote, Take or Buy: Internet criminals’ domain name needs and what registries can do against it, Michael Hausding

This first talk started nice and slow by revisiting the fundamentals of DNS: what it is, why it is useful and how it works from a registry's point of view. It then explained why criminals need DNS, namely for:

- Phishing/spam, obviously one of the most prominent uses

- Malware distribution and control

- Fraud

- Targeted attacks (DNS exfiltration)

- And more recently cryptomining

To carry out these activities, criminals can either buy domain names or take them over. Since last year, SWITCH has seen a rise in the number of .ch domains bought for malicious activities compared to the number of compromised domains. There are multiple reasons for this, but Michael mentioned the low cost of a .ch domain (less than 6€) and the fact that, since the end of SWITCH's monopoly, .ch domain names can be bought from anywhere on the planet, even from resellers of resellers of registrars.

What can SWITCH, as a registry, do against these activities? Its actions are now legally based on the VID, the Verordnung über Internet-Domains (Ordinance on Internet Domains). In case of malware, phishing, DNS compromise or illegal content, it gives SWITCH the following rights:

- Suspension (for 5 days that can be extended up to 30 days under given circumstances)

- Deletion (if no answer from the domain holder after 30 days)

- Sampling

- Sinkholing (for existing or new domains, useful against malware that uses domain generation algorithms to locate its Command and Control servers)
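To illustrate why sinkholing *new* domains matters, here is a toy domain generation algorithm. It is a hypothetical sketch, not the algorithm of any real malware family: the name `generate_domains`, the date-based seed and the `.ch` suffix are all assumptions. Because the output is deterministic, anyone who has reverse engineered such an algorithm can compute tomorrow's domains in advance and have them registered or sinkholed before the malware tries to reach them.

```python
import hashlib
from datetime import date
from typing import List


def generate_domains(day: date, count: int = 5, tld: str = ".ch") -> List[str]:
    """Toy DGA (hypothetical): derive `count` pseudo-random domains from a date."""
    domains = []
    for i in range(count):
        # The seed depends only on the date and the index, so the output is reproducible.
        seed = f"{day.isoformat()}#{i}".encode()
        digest = hashlib.sha256(seed).digest()
        # Map the first 12 bytes of the hash to lowercase letters a-z.
        label = "".join(chr(ord("a") + b % 26) for b in digest[:12])
        domains.append(label + tld)
    return domains


if __name__ == "__main__":
    # A defender who knows the algorithm can precompute the domains for a given day
    # and ask the registry to sinkhole them before the malware contacts them.
    for name in generate_domains(date(2018, 3, 22)):
        print(name)
```

Real DGAs vary in seeding (dates, trending topics, hard-coded keys) and volume, which is exactly why a registry's ability to act on not-yet-registered names is useful.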

With this groundwork laid, it was time for some examples of “Take”:

- Waves of malicious activity reports when a critical CMS flaw is published (a WordPress update, for example)

- Domain hijacking on the registrar’s side (2017: several Gandi domain names hijacked to spread malware)

- Domain delegation hijacking (2016: NZZ website unavailable due to a domain renewal oversight)

- Subdomain hijacking

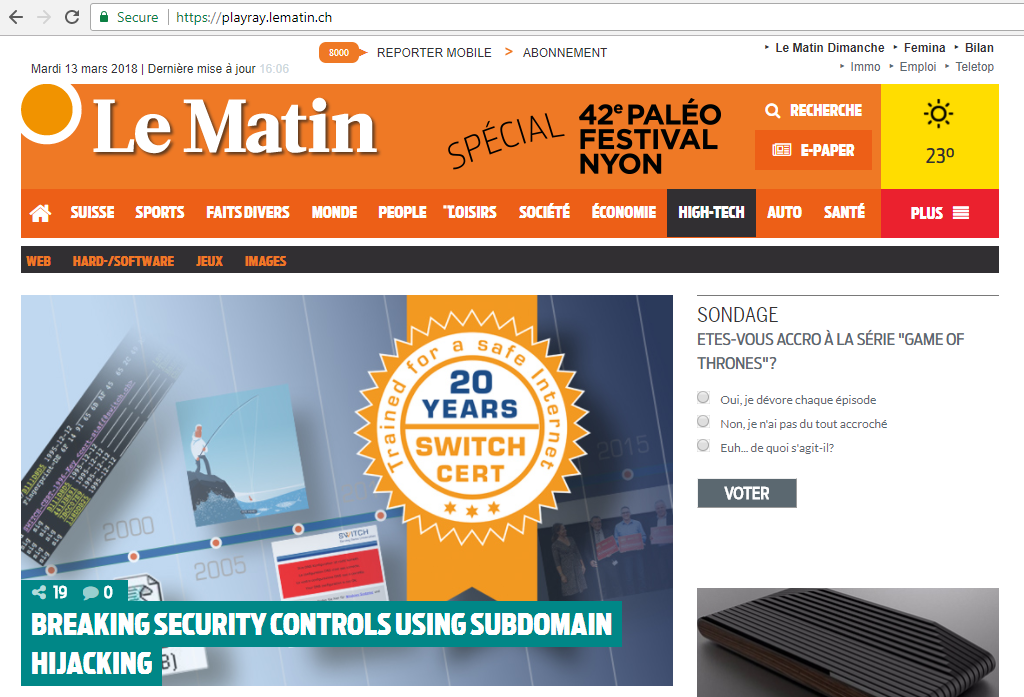

This last one was followed by a live example of the website https://playray.lematin.ch that had been temporarily redirected to a copy of the website showing an article about subdomain hijacking as the headline:

This was followed by two examples of “Buy”:

- Lookalike domain names for credential phishing, e.g. targeting the ricardo.ch platform with domain names like autoricardo-opel.ch

- Reuse of recently expired domain names to benefit from their residual trust and visitors and to sell them something else (fake shoes); see also the @wiederfrei Twitter account
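Lookalike registrations like these can be spotted with simple heuristics. The sketch below is hypothetical: the function names, the `ricardo` brand label and the edit-distance threshold of 1 are assumptions for illustration, not a method presented in the talk. It flags domains whose labels contain the brand name or are a single edit away from it, while ignoring the official domain and its subdomains.

```python
from typing import List


def levenshtein(a: str, b: str) -> int:
    """Classic edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]


def is_lookalike(domain: str, brand: str = "ricardo", official: str = "ricardo.ch") -> bool:
    """Flag domains that reuse or closely imitate a brand label (heuristic only)."""
    name = domain.lower().rstrip(".")
    # The official domain and its subdomains are legitimate.
    if name == official or name.endswith("." + official):
        return False
    labels = name.split(".")[:-1]  # ignore the top-level domain
    return any(brand in label or levenshtein(label, brand) <= 1 for label in labels)


candidates: List[str] = ["autoricardo-opel.ch", "shop.ricardo.ch", "ricrdo.ch", "example.ch"]
print([d for d in candidates if is_lookalike(d)])
# prints ['autoricardo-opel.ch', 'ricrdo.ch']
```

Such a filter produces false positives (legitimate names containing the brand) and misses homoglyph tricks, so it is a triage aid rather than a verdict.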

The conclusion outlined that everything starts with a DNS request on the Internet, that DNS carries the user’s trust and that if DNS is broken, almost everything else is. It emphasised the importance of protecting oneself by:

- Knowing your registrar

- Knowing your DNS and web hosting providers

- Knowing your registry

- Using 2FA (available at most registrars nowadays)

- Raising awareness among the employees responsible for DNS

- Sanitizing your DNS zone and servers

- Using DNSSEC

- Paying your registrar’s invoice :)
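One concrete way of sanitizing a DNS zone, and of guarding against the subdomain hijacking described earlier, is to look for CNAME records pointing to names that no longer resolve, for example a deprovisioned cloud resource that someone else could claim. This is a minimal sketch under stated assumptions: the zone's CNAME records are passed in as a plain dictionary (exporting them is out of scope), the zone contents are hypothetical, and a target that fails to resolve is treated as suspicious, which is a simplification since not every non-resolving target can actually be taken over.

```python
import socket
from typing import Callable, Dict, List


def resolves_via_system(name: str) -> bool:
    """Return True if the system resolver can resolve `name`."""
    try:
        socket.getaddrinfo(name, None)
        return True
    except socket.gaierror:
        return False


def dangling_cnames(cnames: Dict[str, str],
                    resolves: Callable[[str], bool] = resolves_via_system) -> List[str]:
    """List subdomains whose CNAME target does not resolve (takeover candidates)."""
    return sorted(sub for sub, target in cnames.items() if not resolves(target))


if __name__ == "__main__":
    # Hypothetical zone excerpt; the targets are illustrative only.
    zone = {
        "www.example.ch": "example.ch",
        "promo.example.ch": "old-campaign.cloudprovider.example",
    }
    known = {"example.ch"}  # stand-in for real DNS answers, so the example runs offline
    print(dangling_cnames(zone, resolves=lambda name: name in known))
    # prints ['promo.example.ch']
```

Injecting the resolver keeps the check testable offline; in practice you would run it with `resolves_via_system` against an export of your real zone.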

Abusing Android In-app Billing feature, Jérémy Matos

In this talk, Jérémy presented a way to abuse Android's In-app Billing feature. This feature allows developers to easily sell content in their applications. Payment is handled entirely by Google and no credit card data is exposed to the application itself; of course, Google takes a percentage of each transaction for this service.

A good part of the talk was a live demonstration (kudos for this!) of how to exploit this in the game PandaPop to obtain free in-game credits. This game was an example of poor coding decisions: there was no code obfuscation, debug code was left in the production application and a test feature was enabled in production. This made debugging easy with standard tools like Genymotion to emulate the device and jadx to decompile and read the application's source code. Because of the code logic, it was then possible to write a simple hook that changes the identifier of the purchased item to a test value. This test value is sent to Google's services and accepted, as described in their documentation. Although this behavior is documented, letting such a test feature survive in production is a questionable choice. Once written, the hook can be installed on a rooted device using the Xposed framework, or applied directly to the bytecode of the original application so that it works on any non-rooted phone.

Although this particular exploit was only possible because of coding errors, any validation performed on the client side can be bypassed. Jérémy stressed this as an important take-home message. If you want to use the in-app billing feature, you should sell content that cannot easily be predicted; otherwise it is always possible to hook the server's response to a call and trick the application into believing that the purchase was successful. A good example is selling additional game levels, which the player cannot predict.

Jérémy also recommended obfuscating the code when possible: it won’t stop a reverse engineer but will slow down the attack. After briefly describing multiple unsuccessful attempts to responsibly disclose this issue to the game developers and to the company selling the framework on which the game is built, he concluded by stressing the importance of treating client-side code as untrusted and of validating security decisions on the server.
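Applied to in-app purchases, this advice means the server, not the app, decides whether content is unlocked. The sketch below is hypothetical: `PurchaseReport`, `redeem`, `LEVELS` and the `verify_with_store` callback are invented names, and the callback stands in for a server-side lookup of the purchase token with the store (for Google Play, for instance, through the Google Play Developer API). It rejects the reserved `android.test.*` product IDs that Google's billing documentation describes for static test responses, and returns level data only after verification, in line with selling content the client cannot predict.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical catalogue: level data is only ever shipped from the server.
LEVELS: Dict[str, str] = {"level_pack_2": "<level 2 data>"}


@dataclass
class PurchaseReport:
    """What the app sends after a purchase (never trusted as-is)."""
    user_id: str
    sku: str
    purchase_token: str


def redeem(report: PurchaseReport,
           verify_with_store: Callable[[str, str], bool]) -> str:
    """Return the purchased content only after server-side checks pass."""
    # Reserved test product IDs must never unlock anything in production.
    if report.sku.startswith("android.test."):
        raise PermissionError("test product IDs are not accepted in production")
    if report.sku not in LEVELS:
        raise PermissionError(f"unknown product: {report.sku}")
    # Ask the store (not the client) whether this token is a real purchase of this SKU.
    if not verify_with_store(report.sku, report.purchase_token):
        raise PermissionError("purchase could not be verified with the store")
    return LEVELS[report.sku]
```

For local testing, `verify_with_store` can be a stub such as `lambda sku, token: token == "valid-token"`; a hooked client can still lie about the purchase, but it can no longer obtain the content without a token the store confirms.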

SAP Incident Response, real life examples on how to attack and defend, Jordan Santarsieri

SAP handles business-critical information and organizations depend on it: “It’s where the money is!” This statement alone shows why SAP is such an attractive target; another way to put it was that unless you run a SCADA system, SAP is most likely what attackers will go after. After a brief explanation of the various SAP systems and of how NetWeaver is the framework on which SAP is built, the talk continued with three live demonstrations based on anonymised real-life cases. Please forgive any imprecision in my account of the demonstrations: the small font and low contrast made it impossible to see clearly what was shown on screen, and I am quoting from memory.

The first case involved a company where an attacker compromised a privileged user account and escalated privileges across the workstations and servers of the whole company. Upon noticing this, the company created a “white room” where only reinstalled systems were allowed. The key question was whether SAP had been breached, because in that case the company would have had to disclose the incident publicly. To answer it, copies of all SAP servers and all their audit logs were analysed using a custom Python parser, with Splunk used to query the data. Traces of a successful brute-force attack against the DDIC account were found. After that, a call to the SM80 transaction used to start the development environment was found, as well as the use of a procedure called RS_TESTNAME_CALL that prevents events from being written to the audit logs. The conclusion was that the attacker had done this on purpose to stay unnoticed and had probably gained access to the complete SAP environment.
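The parser used in that investigation was not shown. As a purely illustrative sketch, assume the audit events have already been exported to a simplified, hypothetical `timestamp;user;event;detail` text format with invented event names `LOGON_FAIL` and `LOGON_OK` (real SAP audit logs look different). The code flags a successful logon that follows a burst of failed logons for the same account, and any event mentioning the objects named in the talk.

```python
from collections import defaultdict
from typing import Iterable, List

# Objects named in the talk; a real watchlist would be much longer.
WATCHLIST = {"SM80", "RS_TESTNAME_CALL"}


def analyse(lines: Iterable[str], threshold: int = 5) -> List[str]:
    """Scan hypothetical 'timestamp;user;event;detail' records for suspicious patterns."""
    failures = defaultdict(int)  # failed logons per user since their last success
    findings = []
    for line in lines:
        if not line.strip():
            continue
        timestamp, user, event, detail = line.strip().split(";", 3)
        if event == "LOGON_FAIL":
            failures[user] += 1
        elif event == "LOGON_OK":
            # A success right after many failures suggests a successful brute force.
            if failures[user] >= threshold:
                findings.append(f"{timestamp} {user}: successful logon after {failures[user]} failures")
            failures[user] = 0
        if detail in WATCHLIST:
            findings.append(f"{timestamp} {user}: use of watch-listed object {detail}")
    return findings
```

At terabyte scale, the same logic would rather be expressed as queries in a tool like Splunk, as was done in the case described.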

The second case was about a US state department that was informed by law enforcement that its systems were being misused by a nation-state actor to spread malware. The ensuing investigation revealed that its SAP system was exposed on the Internet and had been the initial point of intrusion. Once more, all SAP servers were copied and all logs extracted to gather as much information as possible. It turned out that the system had been compromised through a flaw in the Invoker Servlet (CVE-2010-5326). At the time, this flaw was already 5 years old and had been left unpatched on an Internet-exposed system. The first reaction was to take the system down and install the missing patches before restarting the server. At least 3 different attackers were found to have had access to the system, but no database access or lateral movement could be detected.

The last demonstration was based on a case where the IT security team suspected that SAP had been compromised while the IT department assumed it had not. A pentest and a forensic investigation were requested to determine how hard it was to break into this SAP system and whether it had already been compromised. Old exploits (dating from 2002) still worked against this system, and can still be run against today's production systems if they are not correctly patched. This is because of OPS$ authentication, which is deprecated but still widely used in older SAP versions to connect to the database. The live demonstration showed how to bypass Oracle authentication using a simple sqlplus client and recover the logins and password hashes of SAP users.

As a wrap-up, the following points were outlined:

- SAP forensics is complex and the odds are not in your favor

- Logs are usually not available; if they are, expect terabytes of logs to parse

- You can never guarantee that SAP was not compromised because of the sheer size of the SAP infrastructure

- Don’t trust the defaults, they are a source of vulnerability

A brief history of CTF, Jordan Wiens @psifertex

@psifertex is no longer an active CTF player, but he played 10 years of DEFCON CTF finals, created captf.com and develops Binary Ninja. This is how Jordan Wiens introduced himself at the start of the talk. The first part focused on the historical evolution of CTFs, mainly at DEFCON, and pointed out that the number of CTFs has grown a lot in recent years: from about 20 CTFs in 2011 to 140 in 2017 all around the world (data from ctftime)!

The gamification of CTFs makes hacking into systems even more fun, and the competition makes it more thrilling. To push this further, Vector 35 developed a series of games that have to be exploited to win:

- pwnadventure is text-based

- pwnadventure 2 is Unity-based (.NET, and thus rather easy to reverse engineer)

- pwnadventure 3 uses the Unreal Engine (C++, and thus harder to reverse)

- pwnadventure Z is a retro game that runs on the NES console

However, making a great CTF is hard. The scoring mechanism must be reliable and reflect reality. It is even harder for attack/defense CTFs, where the availability of the services has to be measured. Scoreboards range from old-school CRT displays to 3D visualizations reminiscent of Minority Report. These rely on data-driven visualization, which has other uses too: one example showed engineers comparing the execution flow of two versions of a program as a 3D visualization to better grasp the differences.

Then Jordan told some stories from past CTFs; here are a few:

- His team once tried to set up a giant antenna to connect the CTF area to their hotel room. It failed because of the foil coating on the hotel windows; however, the antenna was recycled as a psychological weapon: they pointed it at other teams to make them believe they were being spied on.

- During one CTF, there was a handle on the wall right next to their table. It turned out to be a roomy closet, which they used as a comfortable and quieter place to work during the competition. When a janitor came by, he just said “oh there is people in here” and left them there.

- Several times, tools were broken during CTFs: teams know which tools the others will use and may well use this knowledge to attack them. The example of libpcap/Wireshark was given: Wireshark, which captures traffic through libpcap, contains a large collection of parsers for various protocols. Parsing protocols is hard to get right, and vulnerabilities can be found that crash Wireshark or could even give remote access to the user's machine. This is also why many teams develop their own tools and sometimes release them to the community afterwards.

- Almost epic:

  - Once, the scoreboard was based on Asterisk and the server had default credentials on the debug menu. A team tried to exploit this to gain full control of the scoring tool but broke it instead.

  - A crypto backdoor implemented by the CTF organizers almost made it possible to obtain every flag of every team by proxying their requests to other teams.

  - A 0day was discovered in BSD during a CTF.

  - TCP timestamps can be used to count and identify the machines behind a NAT. This could have been used to block other teams from accessing the services while letting the organizers poll them for availability.

- Troll challenges:

  - A trustme executable was provided that would capture audio and webcam video and perform several privacy-invasive operations before sending the results to the control server. It would only give the flag if you trusted it to run until the end.

  - One seemingly easy challenge asked players to solve algebraic expressions, which is trivial with Python’s eval() function. There were 100 levels, and level 99 contained a Python injection.

  - The HITCON perfection challenge was designed to break tools at each level, making it harder to debug with well-known tools.
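The eval() trap from the algebra challenge is easy to avoid. A minimal sketch, assuming the challenge only sends arithmetic on numbers, is to parse the expression with Python's `ast` module and evaluate only a whitelist of node types, so an injected call such as `__import__('os').system(...)` is rejected instead of executed. Exponentiation is deliberately left out because expressions like `9**9**9` can exhaust CPU and memory.

```python
import ast
import operator
from typing import Union

Number = Union[int, float]

# Whitelisted operators; anything else in the expression is refused.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def safe_eval(expression: str) -> Number:
    """Evaluate a plain arithmetic expression without using eval()."""
    def walk(node: ast.AST) -> Number:
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](walk(node.operand))
        # Names, calls, attributes, etc. never get executed.
        raise ValueError(f"disallowed syntax: {type(node).__name__}")

    return walk(ast.parse(expression, mode="eval"))


print(safe_eval("2 + 3 * (4 - 1)"))  # prints 11
```

The design choice is a whitelist rather than a blacklist: instead of trying to spot dangerous input, only the handful of node types that arithmetic needs are ever evaluated.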

The presentation ended with what we as a community can do to contribute:

- Contribute to initiatives like ctftime: announce new CTFs and write up challenges so that others can learn

- Mentor others that want to learn

- Write tools (and publish them)

- If anyone wants to revive the Golden Flag award, @psifertex would be happy to hand over everything

Email SPFoofing: modern email security features and how to break them, Roberto Clapis

This talk led us through the existing security features of email. The underlying question was: how can a user make sure that an email is authentic?

SPF

SPF aims to check that the sending server is authorized. It works by querying a specific TXT record in the sender domain's DNS that lists the servers allowed to send mail for that domain. The check is done on the “Return-Path” header (the envelope sender) only, not on the “From” header. The issue is that the “From” header is what all modern email programs, including webmail, display. This makes it easy to forge emails that pass SPF checks while displaying an arbitrary sender address.
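The gap between the two headers can be shown with Python's standard `email` package. The message below is made up (all addresses use reserved `.example` domains): the domain SPF evaluates, taken from `Return-Path` (which the receiving server records from the envelope sender), differs from the domain the reader sees in `From`. SPF alone says nothing about this mismatch; comparing the two is essentially the alignment check DMARC adds. This sketch compares domains strictly, whereas DMARC's default relaxed mode compares organizational domains.

```python
from email import message_from_string
from email.utils import parseaddr
from typing import Tuple

# Hypothetical spoofed message: the envelope domain and the displayed domain differ.
RAW_MESSAGE = """\
Return-Path: <bounce@attacker.example>
From: "Your Bank" <support@bank.example>
To: victim@example.org
Subject: Please verify your account

Click the link below.
"""


def domain_of(header_value: str) -> str:
    """Extract the lower-cased domain of the address in a header value."""
    _, address = parseaddr(header_value)
    return address.rpartition("@")[2].lower()


def check_alignment(raw: str) -> Tuple[str, str, bool]:
    """Return (SPF-checked domain, displayed domain, strictly aligned?)."""
    msg = message_from_string(raw)
    envelope_domain = domain_of(msg.get("Return-Path", ""))
    visible_domain = domain_of(msg.get("From", ""))
    return envelope_domain, visible_domain, envelope_domain == visible_domain


print(check_alignment(RAW_MESSAGE))
# prints ('attacker.example', 'bank.example', False)
```

If attacker.example publishes a valid SPF record for its own server, this message passes SPF even though the reader sees bank.example.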

DKIM

DKIM is a cryptographic mechanism: the sending side signs the message and the signature can then be verified by the destination server. However, it produces many false positives because signatures are broken by some email mechanisms such as redirections. Thus, even if the DKIM signature check fails, most providers will still let the email through.
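Part of this fragility comes from the fact that DKIM signs a hash of the message body (the `bh=` tag), so any intermediary that modifies the body invalidates the signature; a mailing list appending a footer is a typical case. The sketch below implements the “relaxed” body canonicalization and SHA-256 body hash as described in RFC 6376 (section 3.4.4). It omits the header signature and the optional `l=` length tag, so it illustrates the mechanism rather than verifying real messages. Relaxed canonicalization tolerates whitespace changes, but not added content.

```python
import base64
import hashlib
import re


def relaxed_body(body: str) -> bytes:
    """DKIM 'relaxed' body canonicalization (RFC 6376, section 3.4.4)."""
    lines = body.replace("\r\n", "\n").split("\n")
    # Collapse runs of spaces/tabs to one space, then drop trailing whitespace.
    lines = [re.sub(r"[ \t]+", " ", line).rstrip(" ") for line in lines]
    # Ignore empty lines at the end of the body.
    while lines and lines[-1] == "":
        lines.pop()
    if not lines:
        return b""
    # Every remaining line ends with CRLF.
    return ("\r\n".join(lines) + "\r\n").encode("utf-8")


def body_hash(body: str) -> str:
    """Base64 SHA-256 of the canonicalized body, as carried in the bh= tag."""
    return base64.b64encode(hashlib.sha256(relaxed_body(body)).digest()).decode("ascii")


original = "Hello world \n\n"
reformatted = "Hello   world\n"  # whitespace changes only
with_footer = "Hello world\n-- \nSent via a mailing list\n"

print(body_hash(original) == body_hash(reformatted))  # prints True
print(body_hash(original) == body_hash(with_footer))  # prints False
```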

DMARC

DMARC builds on SPF and DKIM: it checks the “From” header and specifies what to do if the check fails. The issue is that it was designed before cloud email hosting. It assumes that a sender IP is used by only one company and not shared among many. If your cloud provider shares the same external IP among several customers, those customers can effectively send emails in your name. Likewise, if you list the whole IP range of an AWS region as a trusted sender in SPF, anyone can rent a server in that AWS region and spoof emails from your domain.

These issues affected Office 365: its SPF records authorized about 330,000 IPs in total. Some of them were in ranges that could be rented in the Azure cloud until October 2017. This was reported to Microsoft and has since been fixed.
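To see how a broad SPF record turns into “anyone in that range may send as you”, the sketch below evaluates only the `ip4:`, `ip6:` and `all` mechanisms of a record with Python's `ipaddress` module, first match wins, as SPF does. It is a simplification under stated assumptions: `include:`, `a`, `mx` and `redirect=` (which need DNS lookups) are ignored, and the record is made up, with `10.0.0.0/16` standing in for a hypothetical shared cloud range.

```python
import ipaddress

# Result names for SPF qualifiers (RFC 7208); "+" is the default qualifier.
QUALIFIERS = {"+": "pass", "-": "fail", "~": "softfail", "?": "neutral"}


def _terms(record: str):
    """Yield (qualifier, mechanism, value) for each term after 'v=spf1'."""
    for term in record.split()[1:]:
        qualifier = "+"
        if term[0] in QUALIFIERS:
            qualifier, term = term[0], term[1:]
        mechanism, _, value = term.partition(":")
        yield qualifier, mechanism.lower(), value


def spf_result(record: str, sender_ip: str) -> str:
    """Evaluate ip4/ip6/all mechanisms in order; the first match wins."""
    address = ipaddress.ip_address(sender_ip)
    for qualifier, mechanism, value in _terms(record):
        if mechanism in ("ip4", "ip6") and address in ipaddress.ip_network(value, strict=False):
            return QUALIFIERS[qualifier]
        if mechanism == "all":
            return QUALIFIERS[qualifier]
    return "neutral"  # no mechanism matched


def authorized_addresses(record: str) -> int:
    """Count addresses granted 'pass' by ip4/ip6 terms (overlaps are counted twice)."""
    return sum(ipaddress.ip_network(value, strict=False).num_addresses
               for qualifier, mechanism, value in _terms(record)
               if qualifier == "+" and mechanism in ("ip4", "ip6"))


RECORD = "v=spf1 ip4:10.0.0.0/16 ip4:192.0.2.0/24 -all"  # hypothetical record
print(authorized_addresses(RECORD))        # prints 65792
print(spf_result(RECORD, "10.0.42.7"))     # prints pass (any tenant of the shared range)
print(spf_result(RECORD, "198.51.100.9"))  # prints fail
```

The point is not the exact count but that every address in an authorized range gets an SPF pass, whoever is renting it.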

As an end user, there is not much you can do to make sure that an email is genuine. You can check the text itself, read the email source and check every header, although this is hardly practical. You could also check the sender IP directly, make sure that it belongs to the company that sent the email and check whether other domains use this IP (with Bing’s “ip:” operator, for instance). Still, none of this can truly guarantee an email's authenticity, and the problem remains open even after 40 years.
