While not new, a self-referencing LNK file in combination with winget configuration instructions can be a viable initial access payload for environments where the Microsoft Store is not disabled.

Compass Security Blog

Offensive Defense

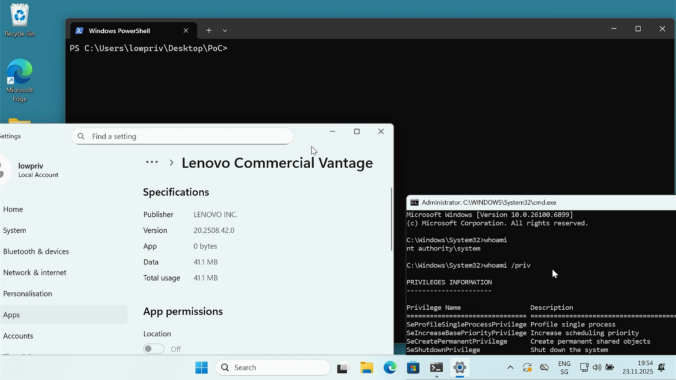

Last year we wrote about a Windows 11 vulnerability that allowed a regular user to gain administrative privileges. Not long after, Manuel Kiesel from Cyllective AG reached out to us after stumbling across a seemingly similar issue while investigating the Lenovo Vantage application. It turns out that the exploit primitive for arbitrary file deletion to gain SYSTEM privileges no longer works on current Windows machines.

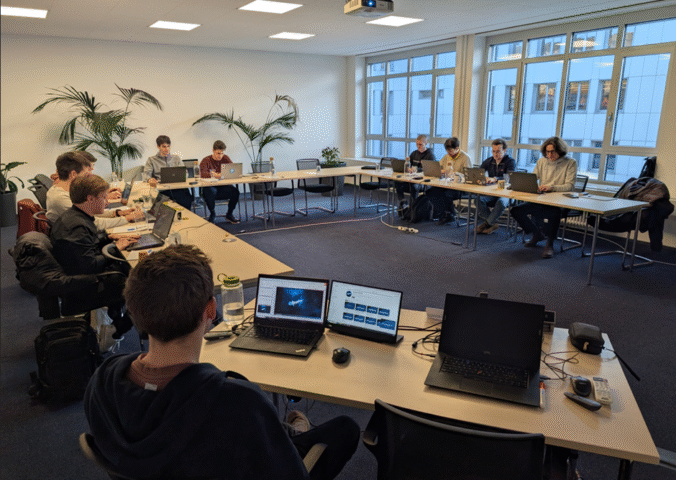

Over the course of 2025, we performed several hundred security assessments for our clients. In each of these, security analysts must understand a new environment and often work with unfamiliar technologies. Even for well-known technologies, things change rapidly. Quick learning and adaptability are essential skills.

To keep our security analysts sharp and up to date, we regularly attend security conferences, external courses, and trainings, and we also organize internal sessions. It has become a tradition for us to spend the first week of January learning new things, starting the year by improving our know-how.

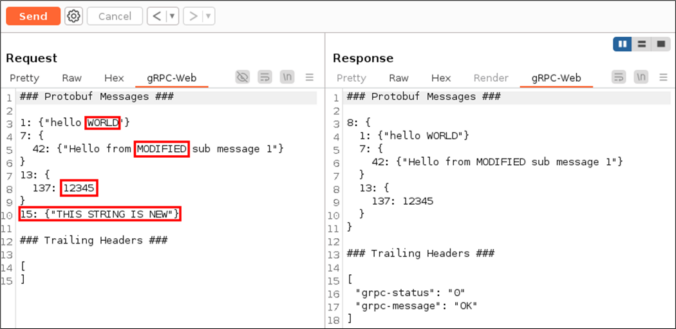

The gRPC framework, and by extension gRPC-Web, is based on a binary data serialization format. This poses a challenge for penetration testers when intercepting browser-to-server communication with tools such as Burp Suite.

This project started after we unexpectedly encountered gRPC-Web during a penetration test a few years ago. It is important to have adequate tooling available when this technology appears. Today, we are releasing our Burp Suite extension bRPC-Web in the hope that it will prove useful to others during their assessments.
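To give a rough idea of why a binary wire format is awkward to handle in an intercepting proxy, here is a minimal Python sketch that splits a gRPC-Web body into its length-prefixed frames. It is not code from bRPC-Web. The function names `build_frame` and `parse_grpc_web_frames` are hypothetical, and the sketch assumes the common framing of one flag byte (high bit set for trailers) followed by a 4-byte big-endian length, with the `grpc-web-text` variant arriving as a single base64 chunk.

```python
import base64
import struct


def build_frame(payload: bytes, trailer: bool = False) -> bytes:
    """Wrap a payload in a gRPC-Web frame: 1 flag byte + 4-byte big-endian length."""
    flags = 0x80 if trailer else 0x00
    return struct.pack(">BI", flags, len(payload)) + payload


def parse_grpc_web_frames(body: bytes, text_mode: bool = False):
    """Return a list of (is_trailer, payload) tuples found in a gRPC-Web body.

    text_mode=True treats the body as one base64 chunk (an assumption of
    this sketch; real streams may concatenate several padded chunks).
    """
    if text_mode:
        body = base64.b64decode(body)

    frames = []
    offset = 0
    while offset < len(body):
        if len(body) - offset < 5:
            raise ValueError("truncated frame header")
        flags, length = struct.unpack_from(">BI", body, offset)
        offset += 5
        payload = body[offset:offset + length]
        if len(payload) != length:
            raise ValueError("truncated frame payload")
        offset += length
        frames.append((bool(flags & 0x80), payload))
    return frames


if __name__ == "__main__":
    # Protobuf bytes for field 1 (length-delimited) containing "hello".
    message = b"\x0a\x05hello"
    body = build_frame(message) + build_frame(b"grpc-status:0\r\n", trailer=True)
    for is_trailer, payload in parse_grpc_web_frames(body):
        print("trailer" if is_trailer else "data", payload)
```

The data payloads are still raw protobuf at this point, so decoding them into readable fields needs the message definitions or a schema-less decoder on top of this step.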

Something a bit wild happened recently: A rival of LockBit decided to hack LockBit. Or, to put this into ransomware parlance: LockBit got a post-paid pentest. It is unclear whether a ransomware negotiation took place between the two, but if one did, it was not successful. The data was leaked.

Now, let’s be honest: the dataset is way too small to make any solid statistical claims. Having said that, let’s make some statistical claims!

The Network and Information Security Directive 2 (NIS2) is the European Union’s latest framework for strengthening cyber security resilience across critical sectors.

If your organization falls within the scope of NIS2, understanding its requirements and ensuring compliance is crucial to avoiding penalties and securing your operations against cyber threats.

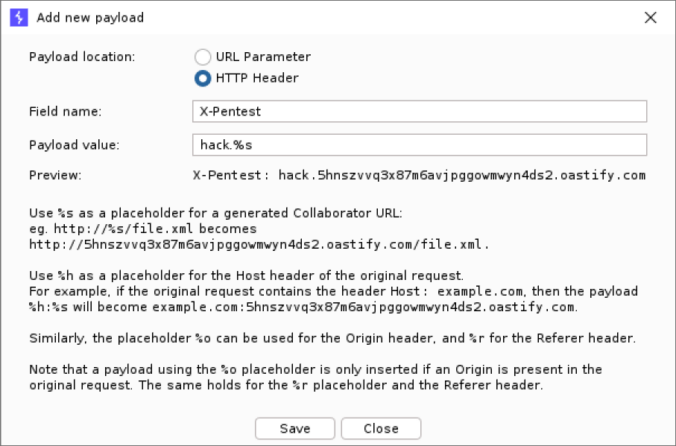

Collaborator Everywhere is a well-known extension for Burp Suite Professional to probe and detect out-of-band pingbacks.

We developed an upgrade to the existing extension with several exciting new features. Payloads can now be edited, and interactions are displayed in a separate tab and stored with the project file. This makes it easier to detect and analyze any out-of-band communication that typically occurs with SSRF or Host header vulnerabilities.

Kerberos is the default authentication protocol in on-prem Windows environments. We’re launching a 6-part YouTube series, a technical deep dive into Kerberos. We’ll break down the protocol, dissect well-known attacks, and cover defensive strategies to keep your environment secure.

In a previous blog post, we explored the technical side of passkeys (also known as discoverable credentials or resident keys), what they are, how they work, and why they’re a strong alternative to passwords. Today, we’ll show how passkeys are used in the real world – by everyday users and security professionals alike.

© 2026 Compass Security Blog