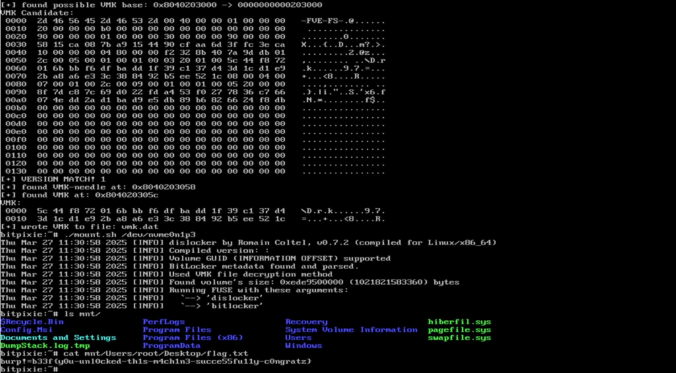

Depending on the customer’s preference, possible initial access vectors in our red teaming exercises typically include deployment of dropboxes, (device code) phishing or a stolen portable device. The latter is usually a Windows laptop protected by BitLocker for full disk encryption without pre-boot authentication, i.e. without a configured PIN or an additional key file. While […]

Compass Security Blog

Offensive Defense

During Business Email Compromise (BEC) engagements we often have to analyze the provenance of emails. According to the FBI’s Internet Crime Report, BEC is one of the most financially damaging attacks, even surpassing ransomware in terms of losses. But how can we know all of this? Through email headers! This blog post tries to shed some light on the information contained within emails, what it means, and what can be done to prevent this type of attack.

The anonymous data on our cases allows us to answer the question “What is a typical DFIR case at Compass Security?” and we conclude it’s the analysis, containment, eradication and recovery of one or a few devices in a Windows domain, which is probably no surprise :)

One of the rare cases where we can decrypt and recover files following a ransomware attack.

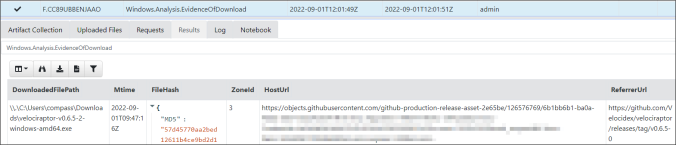

This post provides ideas for processes to follow and gives basic guidance on how to collect, triage and analyze artifacts using Velociraptor.

During a DFIR (Digital Forensics and Incident Response) case, we encountered an ESXi hypervisor that was encrypted by the ransomware LockBit 2.0. Suspicious SSH logons on the hypervisor originated from an end-of-life VPN appliance (SonicWall SRA 4600). It turns out this was the initial entry point for the ransomware attack. Follow us into the forensics […]

Sometimes we go deep down the rabbit hole, only to notice later that what we were looking for was right under our nose.

This is the story of a digital forensic analysis on a Linux system running Docker containers. Our customer was informed by a network provider that one of his systems was actively attacking other systems on the Internet. The system responsible for the attacks was identified and shut down.

Our DFIR hotline responded to the call and we were provided with a disk image (VMDK) to perform a digital forensic analysis.

We put more and more sensitive data on mobile devices. For many private conversations we use mobile applications, such as WhatsApp. This smooth access to the data and the Internet provides multiple benefits in our lives. On the other hand, new attack vectors are created. Phishing messages do not need to be delivered in an […]

An introduction to a Compass Splunk app that can be used to perform a first triage and high-level analysis of Volatility results coming from multiple hosts.

In this blog post we will reverse engineer a sample which acts as a downloader for malware (aka a “dropper”). It is not uncommon to find such a downloader during DFIR engagements, so we decided to take a look at it. The sample that we are going to analyze has been obtained from abuse.ch and was […]
